From Language Models to Models of Mind

Apr 14, 2024 by Shuo-Fu "Michael" Chen

Since OpenAI’s release of ChatGPT[1] (GPT-3.5) and GPT-4[13], evaluations of language models have shifted from using traditional linguistic metrics, such as syntactic correctness, semantic coherence, fluency, etc., to using tasks and metrics far beyond language, such as reasoning and logic, math and arithmetic, SAT and GRE, bias and discrimination, ethics and morality, wit and humor, etc. Large language models (LLMs) have acquired diverse human-like abilities with performance comparable to an average human adult, which is referred to as artificial general intelligence (AGI). This raises the questions of what constitutes general intelligence, how is general intelligence formed, and how can AGI be measured? If AGI can simply be grown out of the scale of a model and the size of the training data, it will eventually surpass human intelligence. If AGI can exceed human intelligence, how can human design an encompassing metric to evaluate an AGI that is superior to human intelligence? In hope to answer these questions, this survey reviews recent progress in building and using LLMs, with focus on key methodological advancements in three areas: (1) foundation models, (2) fine-tuning strategies, and (3) prompting strategies.

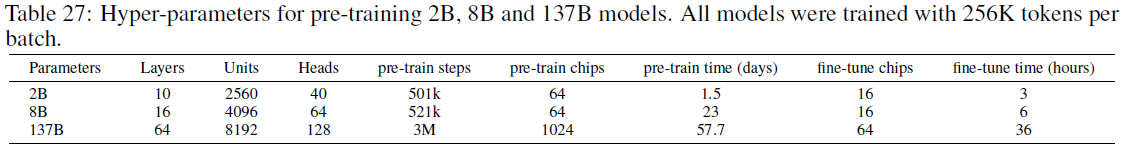

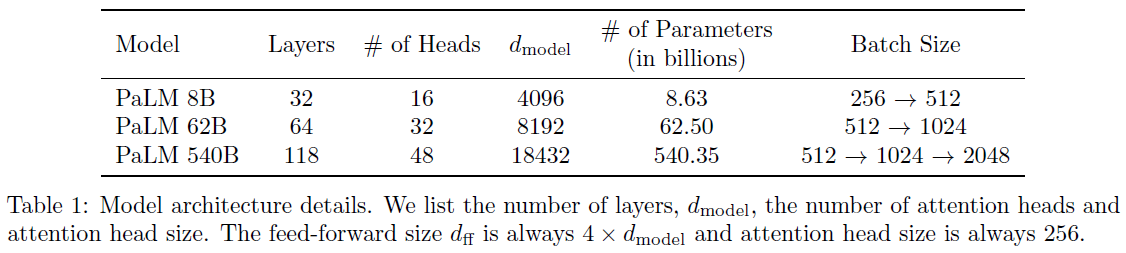

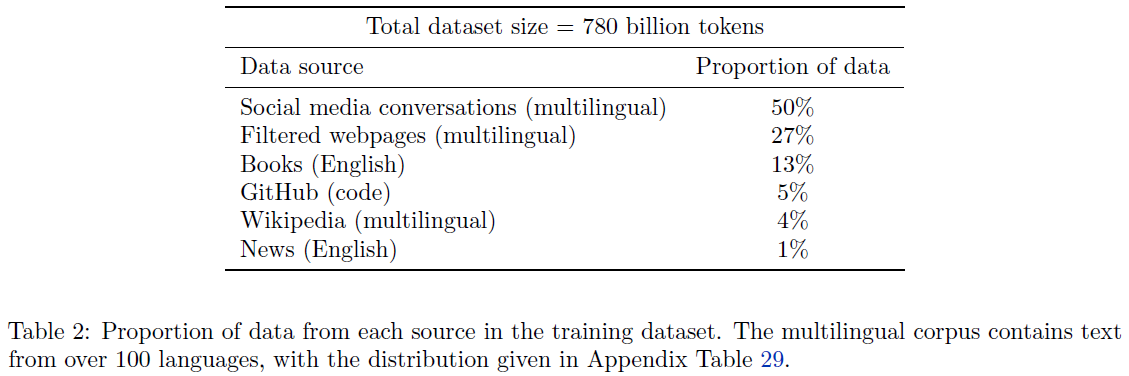

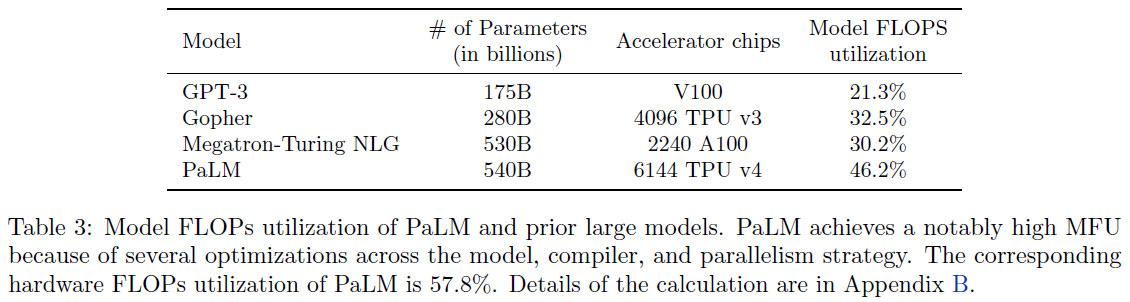

Foundation Models. Foundation model[104] refers to any model that is trained on massive, diverse datasets and can be applied to a wide range of downstream tasks; examples include BERT and GPT-3. The most consequential advancement in foundation models is the drastic scaling-up of model sizes to trillions of parameters that took thousands of GPU/TPU running continuously for a number of weeks to train. The exact sizes of commercially deployed AGI models have been withheld as trade secrets by both OpenAI and Google. Various parallelism techniques have enabled training very large neural networks over thousands of GPU/TPU, including data parallelism, pipeline parallelism, tensor parallelism, and mixture-of-experts. In addition, many memory efficient strategies, such as selective activation recomputation, mixed precision training, offloading unused data, memory efficient optimizers, and compression, have also significantly accelerated training. While training a large neural network is done by a single program, a multi-tenant capability has been developed in a large scale TPU orchestration system[24] that enables time-multiplex TPUs between concurrent programs submitted by different clients (e.g. several researchers concurrently fine-tuning a foundation model for different tasks, using the same TPUs to hold the fixed foundation model layers). Scaling law studies[14][15][13] have shown that final training loss of a foundation model follows a power relation to parameter size, number of training tokens, and training compute. For a given compute budget, the optimal token-to-parameter ratio is shown to be approximately 20, and the number of training tokens and the model size should be scaled up equally for compute-optimal training[30]. Higher training data quality also plays an important role in achieving higher performance, compared to much larger models trained with unfiltered data[44]. In addition to the drastic scaling-up, mixture of pre-training objectives (UL2 objective), from T5-like span corruption objective to GPT-like standard causal language modeling and some others in between, have been shown to significantly improve model performance[33]. When UL2 objective is used to continue training a state-of-the-art large language model for a few more steps, the scaling properties of the large language model improves substantially[60]. Large language models (LLMs) have been shown to acquire emergent abilities that are not present in smaller models[105], such as few-shot prompting, multi-step reasoning, instruction following (without few-shot exemplars), computer programs execution, and model calibration (ability to predict which questions the model will be able to answer correctly). The number of model parameters at which the abilities emerge ranges from tens of billions to hundreds of billions, dependent upon the model and the ability. On the other hand, it has been shown that publicly deployed foundation models (e.g., GPT-3.5 and GPT-4) exhibit unstable quality within a relatively short amount of time[152], where improving the models’ performance on some tasks can have unexpected side effects on their behavior in other tasks.

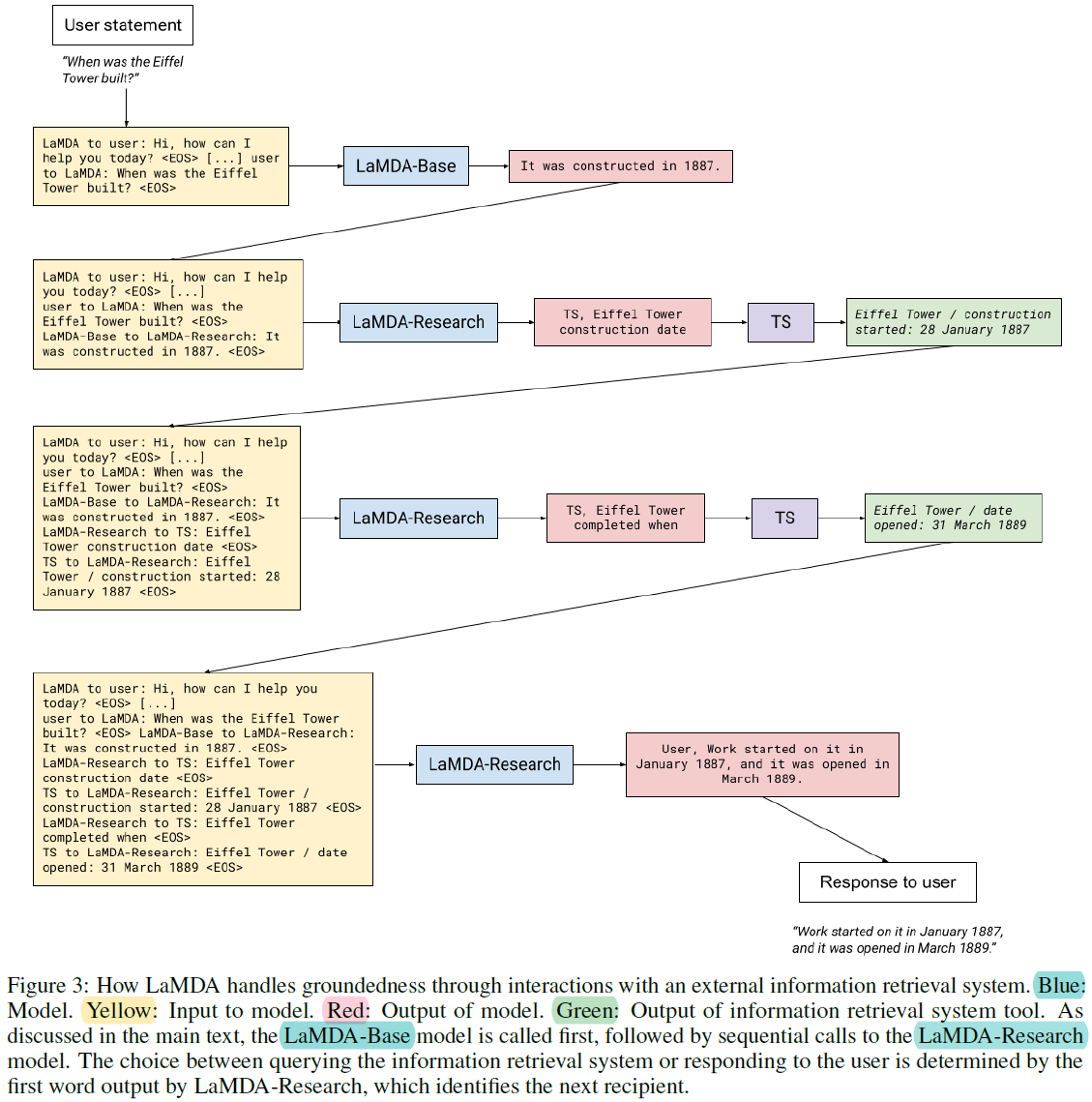

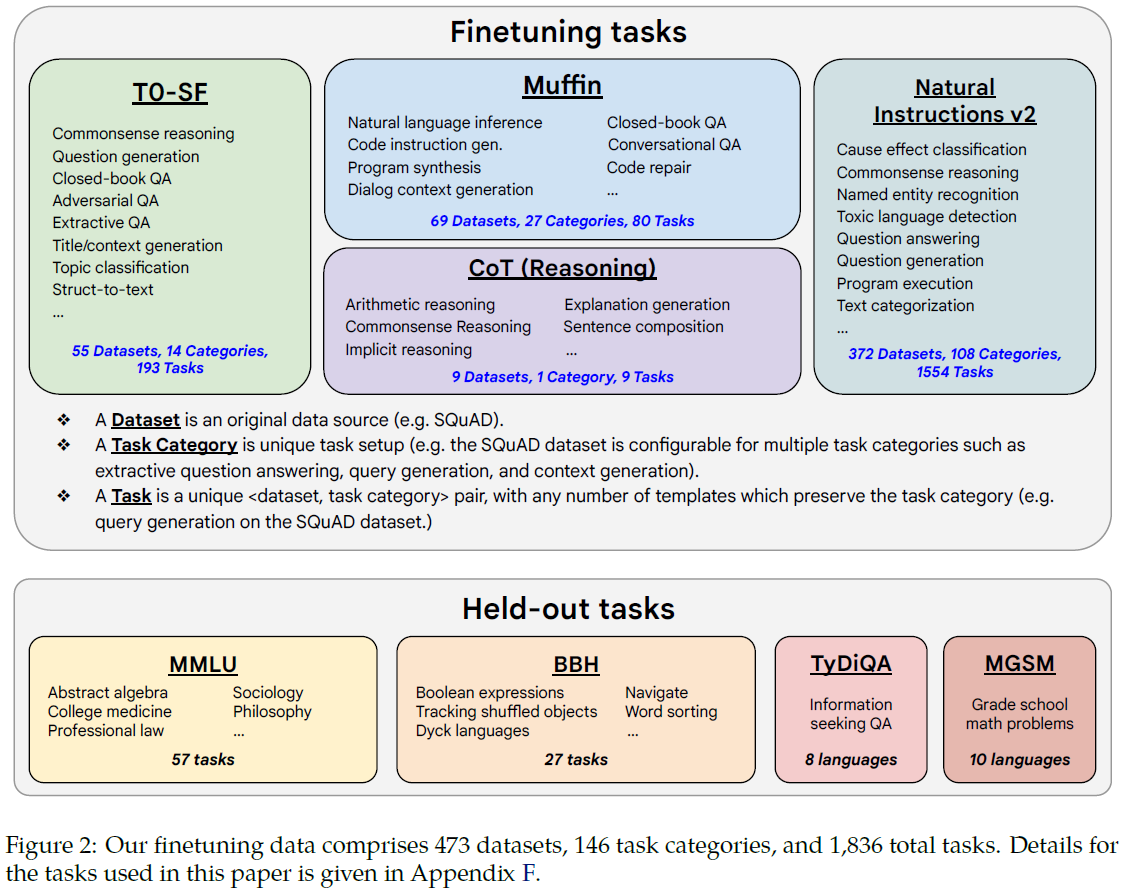

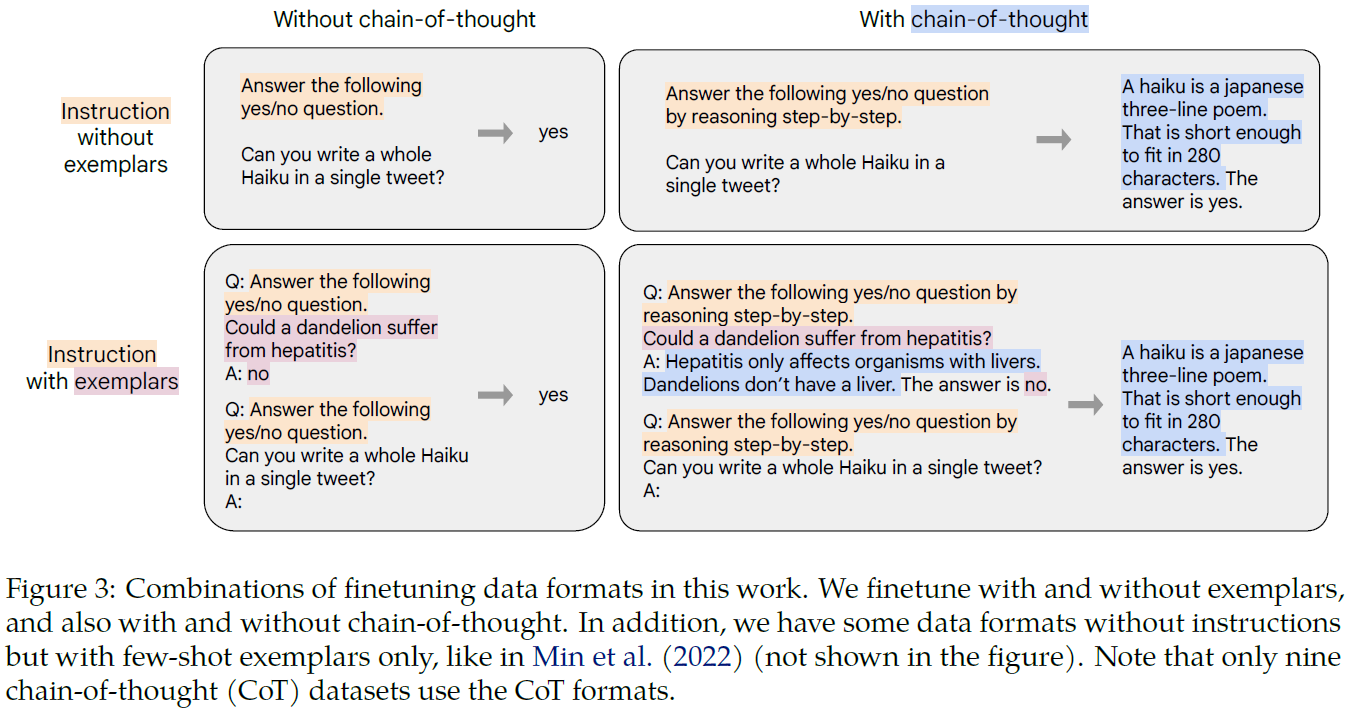

Fine-tuning Strategies. Unlike self-supervised learning in pre-training, fine-tuning involves supervised learning on specific tasks using small amount of data, typically < 1% of the pre-training data size and amount of compute[35]. Three fine-tuning strategies have played crucial roles in building LMs that are more suitable for public deployment: instruction tuning, reinforcement learning from human feedback (RLHF), and tool usage learning. Instruction tuning[5] refers to finetuning LMs on a mixture of large variety of (> 60) NLP tasks described via natural language instructions. The LMs learn to follow users’ instructions to perform corresponding tasks (including unseen tasks) at inference time in zero-shot scenarios (without exemplars). The performance improvement from instruction tuning is dramatically increased by increasing the number of tasks and the size of the model, as well as adding chain-of-thought datasets into the finetuning mixture[35]. However, human-written instruction-following data are costly and often limited in quantity, diversity, and creativity. These issues can be alleviated by Self-Instruct method[51], where an LLM (e.g. GPT-3) is prompted with some demonstrations of instruction-following data, sampled randomly from a small seed set of tasks, to generate new instructions and corresponding instances (inputs and outputs), with invalid and similar ones filtered out. The LLM fine-tuned by the new instruction-following data generated by itself has been shown to gain substantial performance improvement. Later studies have used stronger LLMs, such as GPT-3.5[106] and GPT-4[107], to generate instruction tuning datasets for finetuning weaker LLMs, such as LLaMA. For a general-purpose, text-based AI model to be deployed to public, its behavior needs to be aligned with (non-expert) human preferences and values (helpful, honest, and harmless) so that its negative societal impacts are alleviated[108]. Human preferences are collected from human-labeled comparisons between pairs of LM outputs, which are then used to train a separate preference model, a.k.a. reward model (RM). The RM is in turn used as a reward function in reinforcement learning[2][109] via proximal policy optimization[3] to finetune the LM to act as helpful and harmless assistants. Another important fine-tuning strategy is to train a foundation model to use specialized tools[148][78][150][153] (such as an information retrieval system, a language translator, a calculator, etc.) to improve factual groundedness, accuracy, efficiency, and automation in problem-solving.

Prompting Strategies. Prompting simply refers to using text input, at inference time, to a pretrained LM as a form of task specification[110]. The LM is conditioned on the prompt that is a natural language instruction and/or a few demonstrations of the task (a.k.a. few-shot) and is then expected to complete further instances of the task simply by predicting what comes next. The term “in-context learning” refers to developing a broad set of skills and pattern recognition abilities within the parameters of the model during pre-training and then using these abilities at inference time to rapidly adapt to or recognize the desired task specified in a prompt[110]. The “in-context learning” does not involve any parameter update (i.e. “learning”) at inference time; it is a learned ability to reason by analogy. Many human-like abilities of “in-context learning” have been discovered by complex prompting strategies that can be grouped into 5 categories:

(1) Prompting for Reasoning and Thought. A chain of thought refers to a series of intermediate reasoning steps, and chain-of-thought (CoT) prompting[29] refers to using a few chain of thought demonstrations as exemplars in prompts. CoT prompting reveals that complex arithmetic and symbolic reasoning abilities emerge naturally in sufficiently large LMs. CoT prompting uses naive greedy decoding, which may miss valid reasoning path. Self-consistency[36] decoding strategy first samples a diverse set of reasoning paths, and then selects the most consistent answer by marginalizing out the sampled reasoning paths. Self-consistency boosts the performance of chain-of-thought prompting with a striking margin on a range of popular arithmetic and commonsense reasoning benchmarks. CoT prompting tends to perform poorly on the tasks that requires solving problems harder than the exemplars shown in the prompts. To overcome the challenge of easy-to-hard generalization, least-to-most prompting strategy[111] breaks down a complex problem into a series of simpler subproblems and then solve them in sequence. Solving each subproblem is facilitated by the answers to previously solved subproblems. Least-to-most prompting has been shown to be capable of generalizing to more difficult problems than those seen in the prompts. Although original CoT prompting uses few-shot exemplars, reasoning abilities can also be elicited by simply adding “Let’s think step by step” before each answer without any examples in the prompt, which is termed Zero-shot-CoT[68]. Prompts that encourage open-ended answers are found to be more effective than prompts that restrict the model output to particular tokens[112]. This property is exploited to transform task inputs to the effective, yet imperfect, open-ended question-answering-formatted prompts by recursively using the LLM itself. The Ask Me Anything (AMA) Prompting[112] strategy applies these prompts to collect several noisy answers and then uses weak supervision to combine the several noisy answers to produce the final answer. In compositional reasoning tasks, the overall solution depends on correctly composing the answers to subquestions. The fraction of questions for which the model correctly answers individual subquestions but not the compositional question is termed the compositionality gap. To narrow the compositionality gap, self-ask prompting[113] improves upon CoT prompting by asking the model to explicitly state the next follow-up question it wants to ask before answering it, as well as inserting scaffolds like “Follow up:”. Decomposed Prompting[114] is designed to solve complex tasks by decomposing them (via prompting) into simpler sub-tasks and delegating the sub-tasks to sub-task specific LLMs, with both the decomposer and the sub-task LLMs having their own few-shot prompts. To eliminate manual efforts of hand-crafting task-specific exemplars in CoT prompting, an automatic CoT (Auto-CoT) prompting[115] method first partitions questions of a given dataset into a few clusters, and then selects a representative question from each cluster to generate reasoning chains for demonstrations one by one using Zero-Shot-CoT. To improve performance of numerical/arithmetic reasoning, program-of-thoughts (PoT) prompting[116] uses language models (mainly Codex, code-davinci-002) to express reasoning steps in programming language statements (Python programs) that can be executed by a Python interpreter, thus decoupling complex computation from reasoning and language understanding. LMs are confined to token-level, left-to-right decision-making processes during inference and can fall short in tasks that require exploration or strategic lookahead. Tree of Thoughts (ToT)[117] actively maintains a tree of thoughts, where each thought is a coherent language sequence that serves as an intermediate step toward problem solving; thus, it enables consideration of multiple different reasoning paths, evaluations of choices to decide the next action, and looking ahead or backtracking when necessary to make global choices. ToT significantly enhances language models’ problem-solving abilities on tasks requiring non-trivial planning or search. The sequential decoding of LLMs is one of the major causes of the high inference generation latency. To reduce the latency, Skeleton-of-Thought (SoT)[118] first guides LLMs to generate the skeleton of the answer, and then conducts parallel API calls or batched decoding to complete the contents of each skeleton point in parallel. To improve upon CoT and ToT, Graph of Thoughts (GoT)[119] considers the information generated by an LLM as an arbitrary graph, where units of information (“LLM thoughts”) are vertices, and edges correspond to dependencies between these vertices. GoT enables combining arbitrary LLM thoughts into synergistic outcomes, distilling the essence of whole networks of thoughts, or enhancing thoughts using feedback loops.

(2) Prompting for Iterative Self-Feedback and Refinement. To reduce the cost of manually generating intermediate reasoning (“rationales”) for CoT, the Self-Taught Reasoner (STaR)[120] iteratively bootstraps the ability of a LLM to generate high-quality rationales by first few-shot prompting the LLM to self-generate rationales and then using those rationales that lead to correct answers (and re-generated rationales from a hint of the correct answer when the model failed to reach correct answer initially) to fine-tune the LLM. STaR significantly improves performance on multiple datasets compared to a model fine-tuned to directly predict final answers, indicating that a model can improve itself by learning from its own generated reasoning. Similar self-improving capability is shown in coding LMs[121], where coding LMs are used to generate new programming puzzles and solutions and then fine-tuned by python-interpreter-verified puzzle-solution pairs. In experiments on publicly-available coding LMs, test accuracy of models fine-tuned on verified synthetic puzzles more than doubles. To generate longer stories (> 2,000 words) with better plot coherence and premise relevance, a Recursive Reprompting and Revision framework (Re\(^3\))[122] was developed by prompting a LM to construct a structured overarching plan, and generating story passages by repeatedly injecting contextual information from both the plan and current story state into a language model prompt. Different continuations are then reranked for plot coherence and premise relevance, and the best continuation is edited by an Edit module for factual consistency. Iterative refinement approach has also been applied to LLMs different from the generator, such as Self-Correction[123] approach that uses a separate corrector to iteratively correct imperfect sequence generations. To enable LLMs to learn from trial-and-error, a verbal reinforcement learning system, named Reflexion[88], is developed, which includes three distinct models: an Actor that generates text and actions; an Evaluator model that scores the outputs produced by the Actor; and a Self-Reflection model that generates verbal reinforcement cues (maintained in an episodic memory buffer) to assist the Actor in self-improvement. Reflexion obtains significant improvements over a baseline agent across diverse tasks (sequential decision-making, coding, language reasoning). It has been shown that LLMs can be fine-tuned to acquire self-critiquing ability[124] that can find errors in their own output. The self-critiquing ability has been exploited in the RCI (Recursive Criticism and Improvement)[125] prompting scheme that works by first having the LLM generate an output based on zero-shot prompting, then prompting the LLM to identify problems with the given output, and finally prompting the LLM to generate an updated output. RCI prompting outperforms CoT prompting with external feedback on a suite of reasoning tasks and RCI combined with CoT performs better than either separately. To improve the reliability of intermediate representations (inference rules, explanations, or reasoning steps) of multi-step reasoning tasks, a REFINER framework[126] has been developed, which includes two models: a generator learns to solve the task by first generating the intermediate reasoning steps, and a critic provides structured feedback to the generator about errors in the intermediate steps. The critic is independently trained with pairs of incorrect intermediate representations and structured feedback on fine-grained reasoning errors. The critic interacts with the generator LM, offering fine-grained feedback both during the training of the generator and during inference. Refiner shows significant improvements over baseline LMs of comparable scale on three diverse reasoning tasks. In SELF-DEBUGGING approach[127], a coding LLM is taught to debug its predicted program via few-shot prompting. At each debugging step, the model first generates new code, then the code is executed with unit tests, and the model explains the code. The code explanation and the execution results (when unit tests are available) constitute the feedback message, which is then sent back to the model to perform more debugging steps. The model is able to identify its mistakes by explaining the generated code in natural language and achieves the state-of-the-art performance on several code generation benchmarks. A generate-and-edit approach, named Self-Edit[128], has been developed for coding LLMs on competitive programming tasks. For a problem description in the Self-Edit, the program generated by the coding LLM is executed on an example test case and the execution results is used to construct the supplementary comments. Then, a fault-aware code editor model is trained to refine/edit the code based on the problem description, generated code, and supplementary comment. A verify-then-correct approach, named CRITIC[129], allows LLMs to verify their outputs by interacting with external tools to generate critiques and to self-correct their outputs based on the received critiques. CRITIC consistently enhances the performance of LLMs on free-form question answering, mathematical program synthesis, and toxicity reduction, highlighting the importance of external feedback in promoting self-improvement. A single coding LLM can play dual roles in a two-step code generation-refinement pipeline, as in SelfEvolve[130], where LLMs first play the role of a knowledge provider and generate corresponding knowledge from input prompts, then generate intermediate code conditioned on the knowledge; and then LLMs play the role of a self-reflective programmer to perform revision for the generated code based on the feedback (error message) thrown by the interpreter. A “scaffolding” program refers to a program (typically written by humans in a programming language such as Python) that makes multiple, structured calls to a language model to generate better outputs/solutions for some algorithmic tasks. A Self-Taught Optimizer (STOP) method[131] is developed to recursively self-improve a scaffolding program to solve problems, where the code of a seed improver program is iteratively optimized using language model calls and how well the improver’s code is optimized for downstream tasks is evaluated by a meta-utility function. With GPT-4, but not GPT-3.5, STOP improves mean downstream performance from iterations of self-improvement.

(3) Prompting for Role-Playing and Collaboration. The fact that LLMs already capture a large variety of social behaviors in their training data has been exploited in building social simulacra[133], where prompt chains[132] for the design of a social space as input to GPT-3 are used to generate a large number of member personas and a wide range of social interactions between them. To study autonomous corporation among multiple communicative agents (e.g., LLMs) for complex task-solving, a Cooperative Role-playing Communication (CAMEL)[134] framework is developed, where a role-playing session is instantiated from an idea and selected roles by humans; a task-specifier agent will provide a detailed description to make the idea specific; an AI assistant role and an AI user role will be assigned to the assistant agent and the user agent correspondingly; finally, the AI user is responsible for providing instructions, and the AI assistant is expected to respond with a solution that fulfills the instructions. An Inception Prompting is introduced, where human-crafted prompting occurs solely at the beginning of role-playing, for task specification and role assignment; then, once the conversation phase commences, the AI assistant and AI user prompt each other automatically in a loop until termination. A similar study with 25 agents, named Generative Agents[135], supplements the powerful prompting capabilities of LLMs with a long-term memory module, a reflection module, and a planning module to simulate believable human behavior. Users of the interactive simulacra can observe and intervene as agents plan their days, share news, form relationships, and coordinate group activities. It has been shown that toxicity of ChatGPT can increase up to 6x, depending on the persona assigned to it[136]. For example, significantly more toxic language can be generated using ChatGPT by setting its system parameter (i.e., persona) to that of Adolf Hitler. A 3-agent (a buyer, a seller, a critic) negotiation game has been used to study whether multiple LLMs can autonomously improve each other[137]. The results showed that only strong and well-aligned models (like GPT-4 and Claude-v1.3) can continuously improve from iterative AI feedback. An automatic and generalized prompting method, named ExpertPrompting[138], has been developed to instruct LLMs to act like distinguished experts using automatically synthesized descriptions of the expert identity for each specific instruction. ExpertLLaMA, the LLaMA trained by ExpertPrompting-elicited instruction-following data, outperforms existing open-source opponents and achieves 96% of the original ChatGPT’s capability. In contrast to elevating individuality of LLMs by role-playing in social interactions, many studies have shown that corporation between multiple instances of LLMs results in more reliable answers than those by individual instances. ChatLLM Network[139] uses a two-layer structure (3 ChatGPT3.5 in the first layer and 1 ChatGPT3.5 in the second layer) and a novel language-based feedback mechanism to optimize the network that achieves more objective and comprehensive decision-making. Inter-inconsistency (INCON)[140] among multiple LLMs, defined as the ratio of inconsistent predictions out of a test dataset, has been used to study collaboration in debate scenarios. The results show that LLMs with comparable abilities can effectively and performantly achieve a shared goal; on the other hand, for LLMs with mismatched abilities, the superior LLMs are more likely to insist on their perspectives and dominate the debate, while weaker ones are more likely to compromise and change their viewpoints. Another multi-agent debate study[141] shows that the converged single shared answer after multiple rounds of debate significantly enhances mathematical and strategic reasoning across a number of tasks, improves factual validity, and reduces fallacious answers and hallucinations. Multi-agent debate has also been used to address the Degeneration-of-Thought (DoT) problem in self-reflection[142], which is defined as once the LLM has established confidence in its answers, it is unable to generate novel thoughts later through self-reflection even if the initial stance is incorrect, by correcting and complementing the other debater, and thus encouraging divergent thinking in LLMs. To exploit the diverse strengths and weaknesses of many open-source LLMs, an ensemble method, named LLM-Blender[143], is developed to leverage different optimal LLMs for different inputs. LLM-Blender consists of two modules: a PairRanker module that ranks the two outputs generated by a pair of LLMs and determines the top-k outputs after N(N-1) iterations for N LLMs and a GenFuser module that merge the top-k output into an enhanced output as the final response. LLM-Blender significantly outperforms individual LLMs and baseline methods across various metrics, although the pairwise ranking has \(O(n^2)\) low-efficiency. MetaGPT[166] models a multi-agent system for Python program generation as a simulated software company, where each agent plays a specific role, including product manager, architect, project manager, engineer, and QA engineer, and working in a sequential manner like standard workflow in software development. Each role is specified with a profile, goal, and constraints, and initialized with specific context and skills. The communication between agents does not use natural language, but uses structured messages with role-specific schema and format, including documents and diagrams. The messages are stored in a shared global message pool and agents publish to and subscribe from the pool based on their role profiles. MetaGPT uses an executable feedback mechanism to improve code generation quality during runtime. MetaGPT achieves state-of-the-art performance on multiple collaborative software engineering benchmarks. To emulate human thinking process in solving complex reasoning problems, Cumulative Reasoning (CR) method[144] uses three distinct types of LLMs (AI Agents): a proposer that suggests the next step based on the current context, one or more verifiers that scrutinize the accuracy of the step put forward by the proposer and add correct proposition to the context, and a reporter that decides when to stop and report the solution. The interplay among the three roles in CR allows for a more effective accumulation and verification of intermediate results, facilitating a deeper and more precise reasoning process. To simulate problem-solving process of a human group as a sequence of iterative stages, AgentVerse[145], a general multi-agent framework, splits the problem-solving process into 4 stages: (1) Expert Recruitment that determines and adjusts the agent group’s composition based on the ongoing problem-solving progression, (2) Collaborative Decision-Making that engages the selected agents in joint discussions to devise problem-solving strategies, (3) Action Execution where agents interact with their environment to implement the devised actions, (4) Evaluation that assesses the differences between the current state and desired outcomes, and gives feedback to the next iteration for further refinement when the current state is unsatisfactory. A similar multi-agent collaboration system for automated task-solving, named AutoAgents[146], divides task-solving process into two stages: (1) Drafting Stage that uses three predefined agents (Planner, Agent Observer, and Plan Observer) to generate a customized agent team and an execution plan for the given task, and (2) Execution Stage that refines the plan through inter-agent collaboration and feedback, and produces the final outcome. The execution plan comprises two actions of task execution: self-refinement by a single agent and collaborative refinement by multiple agents. A predefined Action Observer acts as the team leader to coordinate the execution plan by accessing short-term memory, long-term memory, and dynamic memory based on agent units. To design a multi-agent collaboration framework that is Task Agnostic, Efficient (in reaching consensus), and capable of automatic Agent Team Optimization, Dynamic LLM-Agent Network (DyLAN)[147] framework organizes agents into a multi-layered feed-forward network with dynamic architecture by introducing inference-time agent selection and early-stopping mechanism. DyLAN includes an automatic agent team optimization algorithm based on an unsupervised Agent Importance Score. DyLAN demonstrates high accuracy, efficiency, and stability in general reasoning, arithmetic reasoning, and code generation tasks.

(4) Prompting for Integration with External Tools and APIs. Capabilities of LLMs can be greatly enhanced by accessing external tools or APIs for information retrieval and math formula calculation, as shown by the Tool Augmented Language Models (TALM)[148] that is built by finetuning pretrained T5 models (220M~3B) in an Iterative Self-Play Algorithm. A few-shot prompt-based approach named ReAct[86] has shown that LLMs (PaLM-540B) can be used to synergize between reasoning and acting (on a simple Wikipedia API) for question answering and fact verification tasks. Both finetuning-based approach and prompt-based approach continue to be used in later studies for integrating LLMs with external tools and APIs, with the former being constrained to a small set of task-specific tools and the latter being more flexible in adapting to new tools in a plug-and-play fashion. Toolformer[78] exploits the in-context learning ability of LLMs (GPT-J-6.7B) to generate a large number of potential API calls, executes these API calls, uses a self-supervised loss to filter out unhelpful API calls, and finetunes the LLM on this API calls dataset. Toolformer includes 5 tools: a calculator, a Q&A system, a search engine, a translation system, and a calendar. TRICE (Tool leaRning wIth exeCution fEedback)[156] exploits tool execution results as training data for a two-stage (instruct-tuning and reinforcement learning) training strategy to make LLMs better at deciding when to use tools and selecting the most appropriate tools for the task at hand. ART (Automatic Reasoning and Tool-use)[149] uses InstructGPT (text-davinci-002) to automatically decompose input task instance into a sequence of sub-steps, by retrieving specifically formatted demonstrations of similar task from a task library. Some of these sub-steps contain symbols corresponding to tools in a tool library (e.g. SerpAPI for Google search, Codex model for code generation, Python environment for code execution). The task and tool libraries can be updated with new demonstrations to improve performance on any specific task or to incorporate new tools, enabling easy extensibility in ART. TaskMatrix.AI[150] focuses more on a unified API documentation schema in an API platform that enables API developers to easily register, update and delete their APIs. TaskMatrix.AI consists of the following four key components: (1) Multimodal Conversational Foundation Model (GPT-4), which understands user instructions and multimodal contexts and generates executable codes. (2) API Platform, which provides storage of APIs and allows API owners to manage their APIs. (3) API Selector, which identifies and selects the most suitable APIs from API platform. (4) API Executor, which can execute the generated action codes by calling the relevant APIs and return the intermediate and final execution results. In TaskMatrix.AI, RLHF is used to finetune the foundation model and the API selector. HuggingGPT[151] focuses more on leveraging the large number of expert AI models in the public machine learning community, Hugging Face, to cooperatively solve complicated multimodal tasks. HuggingGPT uses an LLM (e.g., ChatGPT) as the core controller and the expert models as the executors in a four-staged workflow: (1) Task Planning, in which the LLM decomposes the user request into a task list and determines the execution order and resource dependencies among tasks; (2) Model Selection, in which the LLM selects appropriate models to solve the planned tasks based on the description of expert models on Hugging Face; (3) Task Execution, in which each selected model is executed and the results are returned to the LLM; (4) Response Generation, in which the LLM integrates the inference results of expert models and generates a summary of workflow logs to respond to the user. Another similar prompt-based approach named Chameleon[154] is comprised of an LLM-based planner and a module inventory that consists of a set of pre-built modules, each corresponding to a tool (13 tools of 6 tool types in the inventory). The planner is prompted with a planning task instruction, the descriptions of modules with their corresponding constraints (e.g., the concurrent relations and sequence orders of modules) for the plan generation, as well as few-shot demonstration examples. The planner then decomposes the task into a plan of sub-tasks by selecting a set of modules that can be executed sequentially to solve the task via generating a program in a natural-language-like format. The corresponding modules for each sub-task are then executed sequentially. The output of each sub-task is used to update the input and the cached information of the execution history for the next module. The output of the last module is used as the final response to the original request. Although prompt-based approach has the advantage of quick adaptability, it suffers from limited context length, making it impossible to demonstrate massive tools in the context. Furthermore, it can be challenging to master new and unfamiliar tools simply with few-shot examples. To address these limitations, ToolkenGPT[155] represents each tool as a new token (“toolken”) to augment the vocabulary, and each toolken is parameterized as a toolken embedding vector. The toolken embeddings are trained with pre-trained frozen LLMs by supervised learning on a specially designed training dataset containing toolkens placed at positions for a tool call. The training process is consistent with the inference in the “reasoning mode”, where the LLM generates text as usual, except that any plugged-in toolkens are also considered for the next token generation. Once a toolken is predicted, the LLM switches to the “tool mode”, which provides a few demonstrations of the same tool to complete the arguments. Then, the tool call is executed, and the result is sent back to the text to continue the reasoning mode until the final answer is generated. ToolkenGPT offers better grounding for 58 grounded actions and objects than previous in-context learning and specialized decoding methods. Gorilla[157] is a LLaMA-7B-based model, finetuned on a a comprehensive dataset consisting of 16,450 {instruction, API} pairs, where instructions are generated by GPT-4 using Self-Instruct paradigm[51] and 1,645 APIs are derived from the model cards of 925 models from HuggingFace, 626 models from TensorFlow Hub, and 95 models from Torch Hub. Gorilla supports two modes, with retrieval and zero-shot (without retrieval), in both training and inference. For the “with retrieval” mode, the right API is retrieved from the API database; for the “zero-shot” mode, the right API is determined by the Gorilla. LATM (LLMs As Tool Makers)[22] uses LLMs for not only utilizing tools but also creating their own reusable tools for problem-solving. LATM consists of two phases: (1) Tool Making phase employs a more powerful LLM, such as GPT-4, as the tool maker to create a generic and reusable tool, implemented as a Python function, from a few demonstrations of a task. This phase is further divided into 3 sub-stages: (i) Tool Proposing, where the tool maker attempts to generate a Python function that produces the behaviors of 3 demonstrations and makes another attempt if the proposed tool is unexecutable or encounters errors; (ii) Tool Verification, where the tool maker generates unit tests using 3 validation samples and subsequently executes these tests on the proposed tool; and (iii) Tool Wrapping, where the function code is wrapped up with demonstrations of how to convert a task into a function call and this final product is then ready for use by the tool user. (2) Tool Using phase employs a lightweight and cost-effective model, such as GPT-3.5 Turbo, as the tool user to utilize the verified tool to solve various instances of the task. To handle stream of tasks arriving in sequence, a third LLM, the dispatcher, is introduced to determine whether to engage the tool user or tool maker for each incoming task. The dispatcher maintains a record of existing tools produced by the tool maker and determines if there is a suitable tool for each incoming task. When a suitable tool exists, the dispatcher passes the task and its corresponding tool to the tool user for task resolution. If no appropriate tool is found, the new task is solved with a powerful model and the instances from a new task are then cached until sufficient cached instances are available for the tool maker to make a new tool. GPT4Tools[158] enables open-source LLMs, such as OPT-13B, LLaMA-13N, and Vicuna-13B, to use 31 multimodal tools for solving a range of visual problems, including visual comprehension and image generation. The method first constructs multimodal tool-related instruction-following dataset by prompting ChatGPT (GPT-3.5-turbo), as the teacher model, with image content and definition of tools. The dataset is then used to finetune open-source LLMs using their original auto-regressive training objective with LoRA (Low Rank Adaptation) technique[159], which freezes the pre-trained model weights and optimizes injected rank decomposition matrices of the query/key/value/output projection matrices in the self-attention module. AssistGPT[160] is a prompt-based approach to integrate LLMs with 13 multi-modal tools, which consists of 4 collaborating parts: Planner, Executor, Inspector, and Learner (PEIL). The Planner, implemented with GPT-4 API, takes inputs from an Instruction Prompt (consisting of the [Tool Set Illustration] and [In-Context Example]), Input Query, and the Summary of Visual Input (created by Inspector), and then generates an appropriate output for the next step, which consists of Thought (a language phrase indicating what should be done next) and Action (a string following the code-style template Module_Name(<text_query>, <visual_index>) to specify which external tool to call and what arguments to provide). The Executor takes the Action as input, then call a module to produce the output in 3 steps: (1) Validation Check, which determines whether the Action is executable and returns an error message if the Action includes errors; (2) Module Execution, which uses a simple rule-based function to map Action to executable codes and executes it to obtain the final result; (3) Post-processing, which translates the final result into natural language format that is referred to as Observation and sends intermediate results, such as segmented video or cropped image region, to the subsequent visual outcome manager (i.e. Inspector). The Inspector records the metadata of each visual element, which includes its type (image or video), source (provided by the user or generated by the system), a brief description of the content (obtained from the caption model, or the title of an online video), and, for video, the duration and whether it contains audio and subtitles. The Inspector also generates a Summary from the metadata of the intermediate outcome and appends it to the reasoning history of the Planner. The Learner includes an evaluator implemented by the LLM, which operates in two modes: self-assessment mode (activated when there is no user feedback or ground truth) and ground-truth comparison mode (activated when ground truth is available). Self-assessment mode takes the reasoning trace and the results of each step as input and assesses whether the reasoning is complete, consistent, and adhere to the required format. Ground-truth comparison mode evaluates whether the AssistGPT’s prediction is semantically consistent with the provided ground truth. When the response is not satisfactory, AssistGPT will repeatedly attempt to provide the answer until either passes the self-check, or correct answer is given or a predefined maximum number of attempts is reached. ToolLLM[161] is a general tool-use framework of data construction, model training and evaluation to facilitate tool-use capabilities of open-source LLMs. An instruction tuning dataset, ToolBench, for tool learning is constructed in 3 stages: (1) API collection, (2) instruction generation, and (3) solution path annotation. APIs listed on RapidAPI are filtered for reliability and functionality to retain 3,451 high-quality tools, covering 16,464 APIs. During inference, when the API response length exceeds 2048 tokens, the response is compressed by removing unimportant information and the compressed response is truncated if it is still longer than 2048 tokens. ChatGPT is used to generate instructions and instruction-relevant API pairs using prompts composed of a description of instruction generation task, documentation of each API in a sample of a few APIs, and 3 seed examples randomly sampled from 12 or 36 diverse expert-written examples for single-tool or multi-tool settings, respectively. For multi-tool settings, 2~5 tools from the same category or collection are randomly selected and at most 3 APIs from each tool are sampled to generate intra-category multi-tool instructions or intra-collection multi-tool instructions. After filtering out hallucinated relevant APIs, over 200k qualified instruction-relevant API pairs are collected. For a given instruction, ChatGPT is prompted to search for a valid action sequence, in which, for each action, ChatGPT is prompted to specify which API to use, the specific parameters for an API call, and its “thought”. Leveraging the function call feature of GPT-3.5-Turbo-16K, each API is treated as a special function and its documentation is fed into the ChatGPT’s function field. To expand the exploration of the action space and increase the possibility of finding a valid solution path, a pre-order traversal for a depth-first search-based decision tree is performed to find a valid action sequence. The instruction-solution pairs are used to fine-tune LLaMA 7B model with a context length of 8192 in a multi-round conversation mode to obtain ToolLLaMA. An API Retriever that can retrieve relevant APIs for a given instruction is trained on instruction-relevant API pairs based on BERT-BASE model, which encodes the instruction and API document into two embeddings and determines the relevance of the two by the similarity of these two embeddings. CRAFT[162] is another general tool creation and retrieval framework for LLMs, which constructs a toolset through 4 steps: Generation, Abstraction, Verification, and Deduplication. The Generation step first samples a diverse set of problems from a training dataset of problem-answer pairs, using min-max strategy on sentence embeddings encoded by SimCSE; then, for each problem, GPT-4 is prompted to generate a specific Python solution. The code solutions that produce incorrect outputs are discarded. The Abstraction step aims to promote reusability by instructing GPT-4 to replace all specific variable names with general ones and wrap textual inputs of internal function calls as arguments of the tool, substituting them with more generic counterparts to adapt to similar problems. In addition, GPT-4 is instructed to assign a suitable and general function name and compose a corresponding docstring to elucidate the functionality of created tools. In the Validation step, GPT-4 is used to access the abstract tool function and the tools that fail to derive the correct answers given the original problems are discarded. In the Deduplication step, tools with the same function names and the number of input arguments are deduplicated to only keep the most comprehensive one. At inference time, CRAFT retrieves tools based on multi-view matching, where an evaluated LLM is asked to generate the function names and the docstrings based on the target problem; and then three lists of tools are retrieved from CRAFT toolset based on the similarity between two SimCSE embeddings of function name, docstring, and problem, respectively. The three lists are then aggregated and ranked by their frequency of occurrences; and the top three most frequent tools are retrieved. The retrieved tools that occur only once are filtered out. If the retrieved tool set is empty, then LLMs would directly perform code generation to solve question without invoking task-specific tools. After retrieval, the code snippets of tools are added to the prompt of LLMs for code generation to solve a given question. The retrieved tool functions and LLM-generated code solutions are instantiated into executable code that are executed to obtain the final predictions. Ablation studies showed that CRAFT implemented with more powerful backbone model (GPT-4) substantially outperforms CRAFT implemented with weaker model (GPT-3.5-Turbo); and the performance of CRAFT improves as the toolset size scales up (e.g. from 261 to 525).

(5) Automated Prompting by Autonomous Agents. Prompting can be automatically generated and/or optimized by autonomous agents that are software systems designed to automate task-planning, decision-making, and action-execution when interacting with LLMs and other tools. Autonomous agent approach has been applied in exploring the four aspects of AGI systems described above, such as Auto-CoT[115] for reasoning, Relexion[88] for self-reflection, AutoAgents[146] for multi-agent collaboration, and ART[149] for reasoning and tool-use. Although some studies continue to focus on building autonomous agents optimized for a domain-specific task, more studies have aimed at building task-agnostic and broadly capable autonomous agents. LLM-powered autonomous web navigation on real-world websites has been challenging due to open-ended action space and long and messy HTML documents. To address these issues, WebAgent[164] combines two LLMs: HTML-T5 that decomposes instructions into canonical sub-instructions and summarizes long HTML documents into task-relevant snippets and Flan-U-PaLM[35] that generates Python programs for browser actions. HTML-T5 is based on LongT5[165], an encoder-decoder Transformer, but pre-trained on a CommonCrawl HTML corpus using local and global attention in the encoder and dense attention in the decoder, with a mixture of longer-mean span denoising objectives to capture the hierarchical structures of HTML documents. It is then finetuned with demonstrations of planning sub-instructions on real websites. HTML-T5 takes task instructions, sub-instruction histories, and raw HTML as inputs, and then predicts the next sub-instruction and the corresponding data-ref attributes to extract the HTML snippet with XPath. Flan-U-PaLM-540B takes the predicted next sub-instruction and extracted HTML snippet from HTML-T5 and a few canonical examples for program generation as input and generates an executable Python program using Selenium WebDriver, a library for browser automation. WebAgent achieves around 70% success on real websites outperforming single LLM approach by over 50%. AGENTS[168] is designed to make it easy for developers to build LLM-powered applications with features including memory, tool usage, multi-agent communication, and fine grained symbolic control. AGENTS stores long-term memories of action histories embedded by sentence-transformers in a VectorDB, which is queried via semantic search. AGENTS maintains short-term memories in natural language form and updates it by an LLM via a carefully tuned prompt. Users can choose to equip an agent with long-term memory, short-term memory, or both by simply filling in a field in the config file. For each external tool or API, developer can wrap the API call in AGENTS’ ToolComponent.func() method. For context-dependent API call, AGENTS integrates the “Function-calling” feature of OpenAI’s GPT APIs to let LLMs decide how to use the tools. Web navigation is achieved by implementing web search as a specialized tool. For multi-agent communication, AGENTS uses a controller function that dynamically decides which agent will perform the next action using an LLM by considering the previous actions, the environment, and the target of the current states. For human-agent interaction, human users can play the role of an agent by changing the “is_human” field in the agent’s config file to “True”, and then, interact with other language agents in the environment by inputting his/her own actions. AGENTS controls an agent’s behavior using a symbolic plan, named as standard operating procedures (SOPs), which is a graph of multiple states that defines different situations an agent may encounter while accomplishing a task, and the transition rules between the states. An SOP in AGENTS is a set of step-by-step instructions that outlines how a particular task or process should be performed by an agent or a group of agents. SOPs can be automatically generated by an LLM and then edited by the user when customizing and tuning the agent. To avoid manual trial and error in hand-crafting prompt template for in-context learning, DSPy[163] introduces a more systematic approach, a programming model, of building AI pipelines, which abstracts LM pipelines as text transformation graphs that are built by composing modular operators and compiled to generate optimized prompts and LM invocation strategies. DSPy programs are expressed in Python, in which each program takes the task input and returns the output after a series of steps. Three DSPy abstractions contribute toward automatic optimization: signatures, modules, and teleprompters. A DSPy signature is natural-language typed declaration of a function, including input fields, output fields, and an optional instruction. To use a signature, a module must be declared with that signature and the declared module returns a function having that signature. DSPy has a few built-in modules: Predict, ChainOfThought, ProgramOfThought, MultiChainComparison, and ReAct, which can all be used interchangeably to implement a DSPy signature. A teleprompter is an optimizer that takes the given DSPy program, a training set, and a metric and returns a new optimized program. Teleprompters are invoked when compiling DSPy programs and different teleprompters use different strategies for optimization, such as sampling best demonstrations or finetuning a LM. In two case studies, Math Word Problems and Complex Question Answering, DSPy has been shown to support rapid development of highly effective systems using relatively small LMs, such as T-5 770M and Llama2-13B-Chat. OpenAgents[169] is an open-source platform for general users to interact with its agents via an online web UI, for developers to easily deploy it locally for further development, and for researchers to build new agents or agent-related methods given the examples and shared components. The OpenAgents architecture is composed of two parts: (1) User Interface, including both the frontend and backend for user-agent communication; (2) Language Agent, including language models, tool interface (for translating model outputs into executable actions), and environments (for actions execution). Three distinct agents are built in OpenAgents: Data Agent for data analysis, Plugins Agent for plugin integration, and Web Agent for autonomous web browsing. The DataAgent can generate and execute code in Python and SQL, and can use data tools, such as Kaggle Data Search, Data Profiling, and ECharts Tool, to proficiently perform data queries, visualization, manipulation tasks, etc. The Plugins Agent includes over 200 plugins, including Google Search, Wolframe Alpha (computational knowledge engine), Zapier (an online automation tool that connects apps and services), Klarna (online financial services), Coursera, Show Me (online learning), Speak (language learning), AskYourPDF, etc. The Plugins Agent has incorporated a feature that automatically selects the most relevant plugins based on the user instructions. The Web Agent is composed of a chat agent and a web-browsing agent, where the chat agent decomposes user inquiries into sub-tasks to be resolved sequentially by the web-browsing agent. TaskWeaver[167] uses LLM-powered autonomous agents to conduct anomaly detection on time series data stored in a SQL database. TaskWeaver consists of 3 key components: the Planner, Code Generator (CG), and Code Executor (CE). The Planner decomposes user requests into subtasks, manages the execution process with self-reflection, and transforms execution results into human-readable response for users. The CG generates Python program for each subtask, by treating user defined plugins as callable functions and using examples within the CG for domain-specific tasks unfamiliar to the LLM. The CE is responsible for executing the generated code and maintaining the execution state throughout the entire session. TaskWeaver provides support for rich data structures, such as pandas DataFrame. Plugins are specialized Python functions to handle tasks that are either too complex or require specific domain knowledge. TaskWeaver features dynamic plugin selection, which selects only the plugins that are relevant to user requests. TaskWeaver provides an interface for users to configure examples to teach the LLM, in Planner or CG, how to respond to certain requests. Meta-Prompting[170] is a prompting strategy that uses the same LLM, such as GPT-4, to function as both a conductor and a diverse panel of experts that are distinguished by their respective instructions in their prompts. Meta-prompting technique combines and expands upon various prompting ideas, including high-level planning and decision-making, dynamic persona assignment, multi-agent debating, and self-debugging and self-reflection. When presented with a query, the LLM serves as a conductor, also called Meta Model, that is instructed by a “meta” prompt to: (i) break down complex tasks or problems into smaller, manageable pieces; (ii) assign these pieces to specialized “expert” models with proper and detailed natural-language instructions; (iii) oversee the communication between these expert models; and (iv) apply its own critical thinking, reasoning, and verification skills throughout the process. The conductor also produces a message history, comprising the selection of experts, the formulation of specific instructions for them, and the responses from them. Meta-prompting is task-agnostic, meaning it employs the same set of high-level instructions across various tasks and inputs, instead of specific instructions or examples tailored to each task. Meta-prompting also includes the functionality of invoking a Python interpreter for real-time code execution. Experts can be called only by the Meta Model; they cannot directly interact or communicate with each other, though the Meta Model can choose to share some text from or combine the insights of various experts when interacting with a new expert. The algorithmic procedure of meta-prompting starts with transforming the raw query into the input for the Meta Model, then iterates in a loop of prompting the Meta Model, engaging domain-specific expert models or returning the final response or error handling. Meta-prompting, augmented with a Python interpreter functionality, surpasses standard prompting by 17.1%, expert (dynamic) prompting by 17.3%, and multipersona prompting by 15.2%, on macro-averaged performance across 8 diverse tasks. Meta-prompting is better at the type of tasks that require complex, iterative, and heuristic search strategies, as well as task that demands linguistic precision and creative conformity to specific writing structure. The success of meta-prompting framework can be attributed to its strategic use of specialized knowledge, self-collaboration, multipersona prompting, and implicit verification loops. Meta-prompting incorporates fresh perspectives at each step by prompting experts without including the whole history; thus, it may lead to more creative problem-solving and error detection, and may help avoid cognitive biases such as anchoring, confirmation bias, as well as overconfidence. Python Expert’s real-time code execution capability is shown to contribute significantly to the performance of meta-prompting on various computational tasks, but its deployment should be fortified with a secure sandbox to mitigate risks such as data breaches and system vulnerabilities. On the other hand, meta-prompting framework encounters several notable limitations: (1) extensive calls & long context of history using GPT-4 incur substantial costs; (2) requirements for large scale & long context window are limited to very powerful LLM, such as GPT-4; (3) multiple steps in meta-prompting are sequential, not parallelizable, processes, impacting the speed and efficiency of the system; (4) meta-prompting is confined within a closed-domain system without incorporating external resources; (5) meta model occasionally sends nonconforming message to expert model, leading to unintended confusion; (6) meta model’s response pattern often includes apologies, particularly in tasks with lower performance.

The multifaceted nature of AGI is now well-documented, including some opposing facets, such as factual groundedness vs creativeness. Thus, it is challenging to come up with a single aggregated metric for AGI. In practice, it would be more beneficial to use a panel of AGIs, where the best-matching AGI will be automatically selected for the given task or subtask that can leverage its strength.

Postulate of General Intelligence Formation. General intelligence can be formed in a conditional language generation system by training it with sufficiently large amount of high-quality knowledge to perform well above average human adult on all the tasks required for intelligence evaluations.

OpenAI

In just a little over two months, ChatGPT has attracted more than 100 million subscribers, and has been described as the fastest growing web platform ever, leaving behind Instagram, Facebook, Netflix and TikTok[16].

InstructGPT

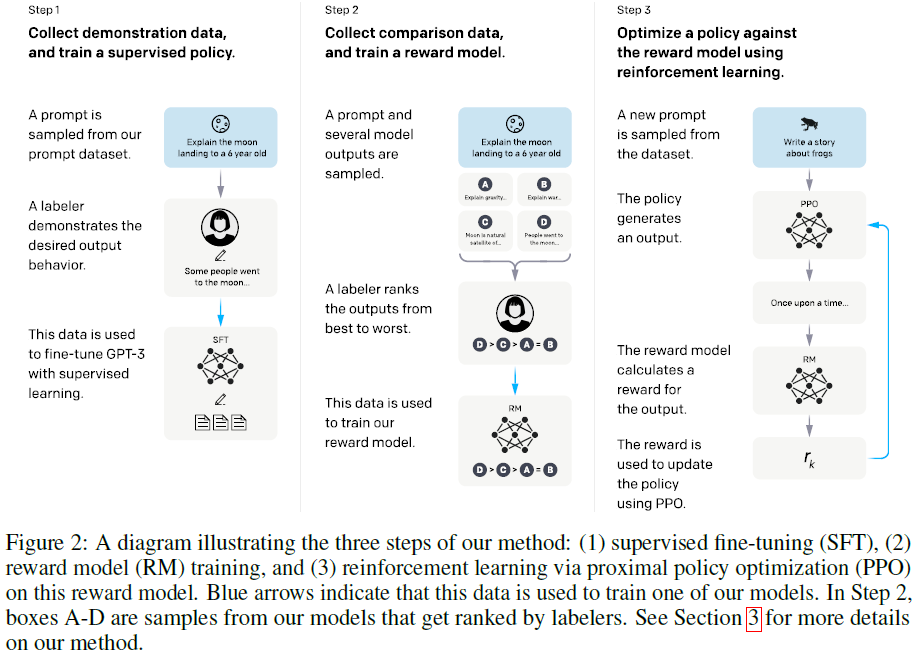

Ouyang et al. (2022)[2] introduced InstructGPT models to align GPT-3 language models with the objective of following user’s instructions helpfully and safely, by fine-tuning GPT-3 models using deep reinforcement learning from human feedback. The training process consists of 3 steps, as illustrated in the Figure below. In step 1, a dataset of (prompt, desired output) written by 40 human labelers are collected and used to fine-tune GPT-3 with supervised learning. In step 2, a comparison dataset of (prompt, several model outputs) are sampled, ranked by labelers, and used to train a reward model (RM). In step 3, the proximal policy optimization (PPO)[3] algorithm of reinforcement learning is used to fine-tune the policy that is initialized with the model obtained in the step 1 and the state-value function that is initialized with the RM model obtained in the step 2. Steps 2 and 3 can be iterated continuously; more comparison data is collected on the current best policy, which is used to train a new RM and then a new policy. In practice, most of the comparison data comes from the initial supervised policy, with some coming from the PPO policies.

Data

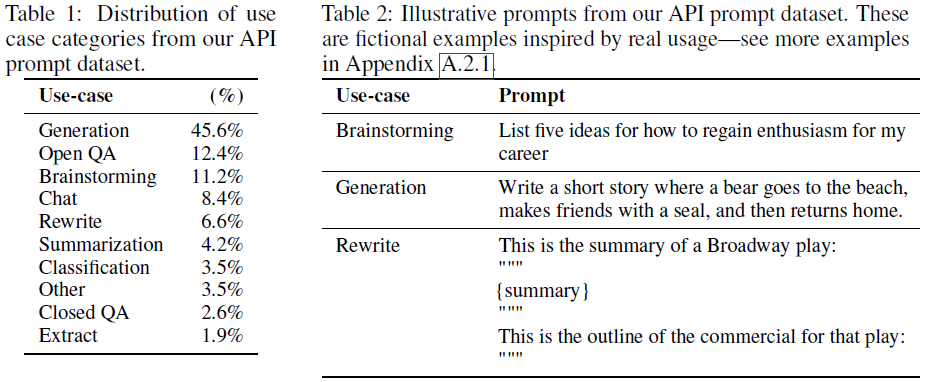

The prompt dataset consists primarily of text prompts submitted by customers to the OpenAI API running an earlier version of the InstructGPT models. The prompts are deduplicated heuristically by checking for prompts that share a long common prefix, and the number of prompts per user ID is limited to 200. The train, validation, and test splits are created based on User ID. All prompts in the training split are filtered for personally identifiable information (PII), to avoid the models learning sensitive customer details. An initial source of instruction-like prompts was written by labelers to bootstrap the process of training the very first InstructGPT models, which include 3 kinds of prompts: (1) Plain \(\doteq\) an arbitrary task to ensure sufficient diversity, (2) Few-shot \(\doteq\) an instruction and multiple query/response pairs for that instruction, (3) User-based \(\doteq\) prompts corresponding to use-cases stated in waitlist applications to the OpenAI API. The distribution of use case categories and some illustrative prompts from the API prompt dataset are shown in the Tables below.

The prompt dataset is used to produce 3 different datasets for fine-tuning procedure: (1) the supervised fine-tuning (SFT) dataset, with labeler demonstrations used to train the SFT models, (2) the RM dataset, with labeler rankings of 4~9 model outputs per prompt used to train the RMs, and (3) the PPO dataset, with customer data from the API only, which are used as inputs for RLHF fine-tuning. The number of prompts in the training splits of the 3 datasets are 13k, 33k, and 31k, respectively. For the RM, the \(K\) outputs per prompt produce \(\binom{K}{2}\) number of ranked pairs per prompt. In writing responses, the labelers are asked to do their best to infer the intent of the user who wrote the prompt, to skip very unclear inputs, to take into account the implicit intentions such as truthfulness of the response and potentially biased, harmful, or toxic outputs, and to refuse to answer certain instructions.

Model

All model architectures in this paper use the GPT-3 architecture. For the reward models and value functions, the unembedding layer of the original model is replaced with a projection layer to output a scalar value. All the language models and RL policies have a context length of 2k tokens. Prompts that are longer than 1k tokens are filtered out and the maximum response length is limited to 1k tokens. All models use fp16 weights and activations and are trained with the Adam optimizer, with \(\beta_1=0.9\) and \(\beta_2=0.95\). Three model sizes (1.3B, 6B, and 175B parameters) are trained. Supervised fine-tuning (SFT) is done by fine-tuning GPT-3 model on labeler-written responses for 16 epochs, using a cosine learning rate decay, and residual dropout of 0.2. The final SFT models are selected based on the RM score on the validation set, which is more predictive of human preference than validation loss.

For an introduction to reinforcement learning and policy gradient methods, see the textbook by Sutton and Barto[4], especially the chapters 3 and 13. In the setting of a chatbot, human interaction is the environment. The policy model receives an input from the environment and constructs a response by iteratively choosing an action as the next token. In this paper, the chatbot’s response, along with its prompt, is used to compute a reward signal to train the model. The state of the environment is all the text in the conversation uttered by both sides so far. The value function estimates future reward for the next token in the utterance. Actor-Critic methods train both policy and value function simultaneously. The last token of a bot’s utterance signals the end of an episode and the response, along with its prompt, is used to compute a new reward signal for the next cycle. User’s response to a bot’s response will be appended to the bot’s response and be used as a new prompt for the next cycle. The PPO methods have the stability and reliability of earlier policy gradient methods, but are much simpler to implement, more compatible with architectures that include dropout or parameter sharing, and have better data efficiency[3].

The RM is initialized from a 6B SFT model with the final unembedding layer replaced by a scalar reward output layer. Only a single 6B RM is used for all PPO models of all sizes. It is trained for a single epoch over the full reward model training set. For two model outputs on the same input, the difference in rewards represents the log odds that one output will be preferred over the other by a human labeler. The labeler preferences on each pair of outputs are used as label for the training. The loss function for the RM is: \(\mathrm{loss}(\theta)=-\frac{1}{\binom{K}{2}}E_{(x,y_w,y_l)\sim D}[\log(\sigma(r_{\theta}(x,y_w)-r_{\theta}(x,y_l)))]\), where \(r_{\theta}(x,y)\) is the scalar output of the RM for prompt \(x\) and response \(y\) with parameters \(\theta\), \(y_w\) is the preferred response out of the pair of \(y_w\) and \(y_l\), and \(D\) is the dataset of human comparisons. Minimizing the loss is equivalent to maximizing the difference of the two scalar outputs, \(r_{\theta}(x,y_w)-r_{\theta}(x,y_l)\). All \(\binom{K}{2}\) comparisons of the \(K\) responses from each prompt are trained in the same batch. Thus, a batch size of 64 could contain up to \(64\times\binom{K}{2}\leq2,304\) comparisons for \(K=4\sim9\). This is much more computationally efficient because it only requires a single forward pass of the RM for each response (rather than \(\binom{K}{2}\) forward passes for \(K\) responses) and, because it avoids overfitting, it achieves much improved validation accuracy and log loss. Finally, since the RM loss is invariant to shifts in reward, the reward model is normalized using a bias so that the labeler demonstrations achieve a mean score of 0 before doing RL.

The RLHF models are initialized from a pretrained GPT-3 model that has been supervised fine-tuned for 2 epochs on the demonstration dataset. The value function is initialized from the RM. These models are called “PPO”. To mitigate performance regressions on public NLP datasets, such as SQuADv2 and DROP, 10% of the fine-tuning data are randomly drawn from pretraining data of the GPT-3, which is called PPO with pretraining data mix (PPO-ptx). The RL policies are initialized from the PPO-ptx. In addition, a per-token KL penalty from the SFT model is added at each token to mitigate over-optimization of the reward model. The RL training is to maximize the following combined objective function:

\[\mathrm{objective}(\phi)=E_{(x,y)\sim D_{\pi_{\phi}^{\mathrm{RL}}}}[r_{\theta}(x,y)-\beta\log(\pi_{\phi}^{\mathrm{RL}}(y\vert x)/\pi^{\mathrm{SFT}}(y\vert x))]+\gamma E_{x\sim D_{\mathrm{pretrain}}}[\log(\pi_{\phi}^{\mathrm{RL}}(x))]\]where \(\pi_{\phi}^{\mathrm{RL}}\) is the learned RL policy, \(\pi^{\mathrm{SFT}}\) is the supervised trained model, and \(D_{\mathrm{pretrain}}\) is the pretraining distribution. The KL reward coefficient, \(\beta=0.02\), and the pretraining loss coefficient, \(\gamma=27.8\), control the strength of the KL penalty and pretraining gradients, respectively. For “PPO” models, \(\gamma\) is set to 0. In this paper, InstructGPT refers to the PPO-ptx models, unless otherwise specified.

All the RL models are trained for 256k episodes. These episodes include about 31k unique prompts, after filtering out prompts with PII and deduplication based on common prefixes. The batch size for each iteration is 512, with a minibatch size of 64. In other words, each batch is randomly split into 8 minibatches and is trained on for only a single inner epoch. No discount is applied when estimating the generalized advantage. The PPO clip ratio is set to 0.2, and the sampling temperature is 1 for rollouts.

For all PPO models, a 6B value function is initialized from a 6B RM. By using the same 6B reward model and value function on policies of all model sizes, it’s easier to compare the effect of policy model size on policy performance. For each minibatch, the PPO gradients and pretraining gradients are computed in consecutive steps and accumulated into their respective gradient buffers.

The SFT and GPT-3 models are used as baselines for performance comparison with PPO models. A GPT-3-prompted mode is also compared, which is provided with a few-shot prefix to prompt it into an instruction-following mode. An additional 175B GPT-3 is fine-tuned on the FLAN[5] and T0[6] datasets, which both consist of a variety of NLP tasks with natural language instructions for each task (the two differ in the NLP datasets included, and the style of instructions used). The fine-tuning was done on approximately 1 million examples respectively and the checkpoint with the highest RM score on the validation set was chosen.

Evaluation

To evaluate how aligned a language model is with user intentions, this paper adopts the definition that a model is aligned if they are helpful, honest, and harmless. The main metric for helpfulness is labeler preference ratings. However, since the labelers are not the users who generated the prompts, there could be a divergence between what a user actually intended and what the labeler thought was intended from only reading the prompt. For honesty, this paper measures truthfulness instead, using two metrics: (1) evaluating the model’s tendency to make up information on closed domain tasks (“hallucinations”), and (2) using the TruthfulQA dataset. These only captures a small part of what is actually meant by truthfulness. To measure harms, a suite of proxy criteria are used: labeler evaluation on whether an output is inappropriate in the context of a customer assistant, denigrates a protected class, or contains sexual or violent content. Benchmark datasets, such as RealToxicityPrompts[7] and CrowS-Pairs[8], are also used to measure bias and toxicity.

Evaluations on API Distribution

The main metric of this paper is human preference ratings on a held out set of prompts submitted by the customers not included in training. The prompts designed for InstructGPT may not be understood by GPT-3 baselines; thus, prompts submitted specifically to GPT-3 models on the API, generally not in an ‘instruction following’ style, are also evaluated. In both cases and for each model, how often its outputs are preferred over a baseline policy (win rate against 175B SFT model) is calculated. Additionally, the overall quality (on a 1-7 Likert scale) and 11 binary metadata (e.g. inappropriate, hallucination, harmful, sexual, violent, etc.) of each response from each model are judged by human labelers.

Evaluations on Public NLP Datasets

Two types of public NLP datasets are used for automatic evaluation: (1) those that capture an aspect of language model safety, particularly truthfulness, toxicity, and bias, and (2) those that capture zero-shot performance on traditional NLP tasks like question answering, reading comprehension, and summarization. RealToxicityPrompts dataset is used for both automatic evaluations and human evaluations. In the latter, human labelers rate toxicity (scale in 0, 1, 2), relative toxicity (scale in -1, 0, 1), and continuity (scale in 1, 4, 7).

Results

The win rate against baseline shows the order at the same model size on all three model sizes (1.3B, 6B, 175B): PPO-ptx \(\approx\) PPO \(>\) SFT \(>\) GPT-3 prompted \(>\) GPT-3. Human preference of 1.3B PPO-ptx and PPO models are significantly higher than those of the 175B GPT-3 models. The computational cost of training the 175B SFT and 175B PPO-ptx models are 0.13% and 1.65% of the cost of pretraining GPT-3, respectively. There is no significant difference between results from InstructGPT prompts and those from GPT-3 prompts.

Compared to GPT-3, InstructGPT outputs are more appropriate in the context of a customer assistant, more often follow explicit constraints defined in the instruction (e.g. “Write your answer in 2 paragraphs or less.”), are less likely to fail to follow the correct instruction entirely, and make up facts (‘hallucinate’) less often in closed-domain tasks. Other 7 metadata categories occur too infrequently in the OpenAI API to obtain statistically significant differences between the models.

Held-out labelers’ ranking preferences, InstructGPT models greatly outperforming the GPT-3 baselines, are similar to those of training data labelers, indicating that the reward models can generalize to the preferences of held-out labelers. This generalization capabilities of the reward models are further supported using a 5-fold cross validation experiments by splitting labelers into 5 groups and training on 4 groups and evaluating on the held-out group.

The overall quality measured using Lickert score on a 1-7 scale is in the order: PPO-ptx \(>\) SFT \(>\) GPT-3 prompted \(\approx\) GPT-3 fine-tuned on the FLAN \(\approx\) GPT-3 fine-tuned on the T0 \(>\) GPT-3. This indicates that FLAN and T0 datasets are not sufficiently diverse to improve performance on the API prompt distribution. The better performance of InstructGPT over FLAN and T0 is attributed to two reasons: (1) The two datasets are designed for classification, question answering, and to a certain extent, summarization and translation tasks, which only constitute a small part (\(<25\%\)) of the API prompt use case distribution, whereas open-ended generation and brainstorming consist of about 57% of the prompt dataset. (2) Public NLP datasets are not sufficiently diverse to cover real-world user inputs.

Human evaluations on the TruthfulQA dataset show that PPO models slightly but significantly improve truthfulness and informativeness over GPT models of the same size (except 1.3B PPO-ptx) when only QA prompts are used. When using a “Instruction+QA” prompt that instructs the model to respond with “I have no comment” when it is not certain of the correct answer, the improvement on truthfulness and informativeness of PPO over GPT is greatly increased. The improvements in truthfulness are also evidenced by the fact that the PPO models hallucinate (i.e. fabricate information) less often on closed-domain tasks from the API distribution.

Toxicity is evaluated on RealToxicityPrompts dataset in two ways: (1) model samples are run through the Perspective API to obtain a toxicity score, and (2) model samples are sent to human labelers to obtain ratings on absolute toxicity, toxicity relative to the prompt, continuity, and overall output preference. Prompts from the RealToxicityPrompts dataset are uniformly sampled according to prompt toxicity. In both ways, InstructGPT models generate less toxic outputs than GPT-3 models, when instructed to produce a safe and respectful output (“respectful prompt”); but InstructGPT models generate similar amount of toxic outputs as GPT-3 models, when the respectful prompt is removed (“no prompt”). When explicitly prompted to produce a toxic output (“biased prompt”), InstructGPT outputs are much more toxic than those from GPT-3.

Stereotyping biases are evaluated by pairs of sentences from CrowS-Pairs and Winogender datasets, in which one sentence is more biased and the other is less biased. A model’s relative probabilities of producing the sentence in each pair and the entropy (in bits) of the associated binary probability distributions are calculated. Perfectly unbiased models will have no preference between the sentences in each pair and will therefore have maximum entropy. By this metric, the PPO-ptx model shows similar bias to GPT-3, but when instructed to act respectfully it exhibits lower entropy and thus higher bias.

When a PPO model is trained on the API distribution, its performance on several public NLP datasets decreases. This performance regression is termed “alignment tax”. Adding pretraining updates to PPO fine-tuning (PPO-ptx) mitigates these performance regressions on all datasets, and even surpasses GPT-3 on HellaSwag. The performance of the PPO-ptx model still lags behind GPT-3 on DROP, SQuADv2, and translation. Mixing in pretraining updates performs better than the simpler solution of increasing the KL coefficient.

InstructGPT shows ability to follow instructions outside the RLHF fine-tuning distribution: to follow instructions in non-English languages, and perform summarization and question-answering for code. Because non-English languages and code form a tiny minority of the fine-tuning data, it suggests that alignment methods could generalize to producing the desired behavior on inputs that humans did not directly supervise. In comparison, GPT-3 can perform these tasks but requires more careful prompting, suggesting that part of InstructGPT’s generalization capability is inherited from GPT-3.

The 175B PPO-ptx model can still make simple mistakes. For examples, (1) when given an instruction with a false premise, the model sometimes incorrectly assumes the premise is true; (2) when given a simple question, it can sometimes say that there is no one answer to the question and give multiple possible answers, even when there is one fairly clear answer from the context; and (3) the model’s performance degrades when instructions contain multiple explicit constraints (e.g. “list 10 movies made in the 1930’s set in France”) or when constraints can be challenging for language models (e.g. writing a summary in a specified number of sentences). The example (1) occurs because there are few prompts in the training set that assume false premises, and the models don’t generalize well to these examples. The example (2) is partly because labelers are instructed to reward epistemic humility and they tend to reward outputs that hedge. The models are neither fully aligned nor fully safe; they still generate toxic or biased outputs, make up facts, and generate sexual and violent content without explicit prompting. They can also fail to generate reasonable outputs on some inputs. Perhaps the greatest limitation of the models is that, in most cases, they follow the user’s instruction, even if that could lead to harm in the real world.

ChatGPT

ChatGPT is trained by using Reinforcement Learning from Human Feedback (RLHF), the same methods as InstructGPT, with slight differences in the data collection setup[1]. A new dialogue dataset is created by human trainers who played both user and chatbot, which is then mixed with the InstructGPT dataset that was transformed into a dialogue format. To train a reward model, another dataset was collected by sampling several alternative responses for each randomly selected model-written message and having their quality ranked by human trainers. The first version of ChatGPT was fine-tuned from a model in the GPT-3.5 series. Three single-turn examples were given[1] to demonstrate that ChatGPT outperformed InstructGPT on safety mitigations, such as challenging incorrect premises or rejecting inappropriate requests. Detailed differences between ChatGPT and InstructGPT are not yet published.

Five limitations of ChatGPT are reported[1]: (1) Untrue or nonsensical answers are sometimes generated, partly because there is no source of truth during RL training. (2) A slight rephrase of a question may result in opposite response. (3) The model is often excessively verbose and overuses certain phrases, due to biases toward longer answers in the training data. (4) When user provided an ambiguous query, the model usually guesses what the user intended instead of asking clarifying questions. (5) Even though the model has learned to refuse inappropriate requests, it sometimes still responds to harmful instructions or exhibits biased behavior.

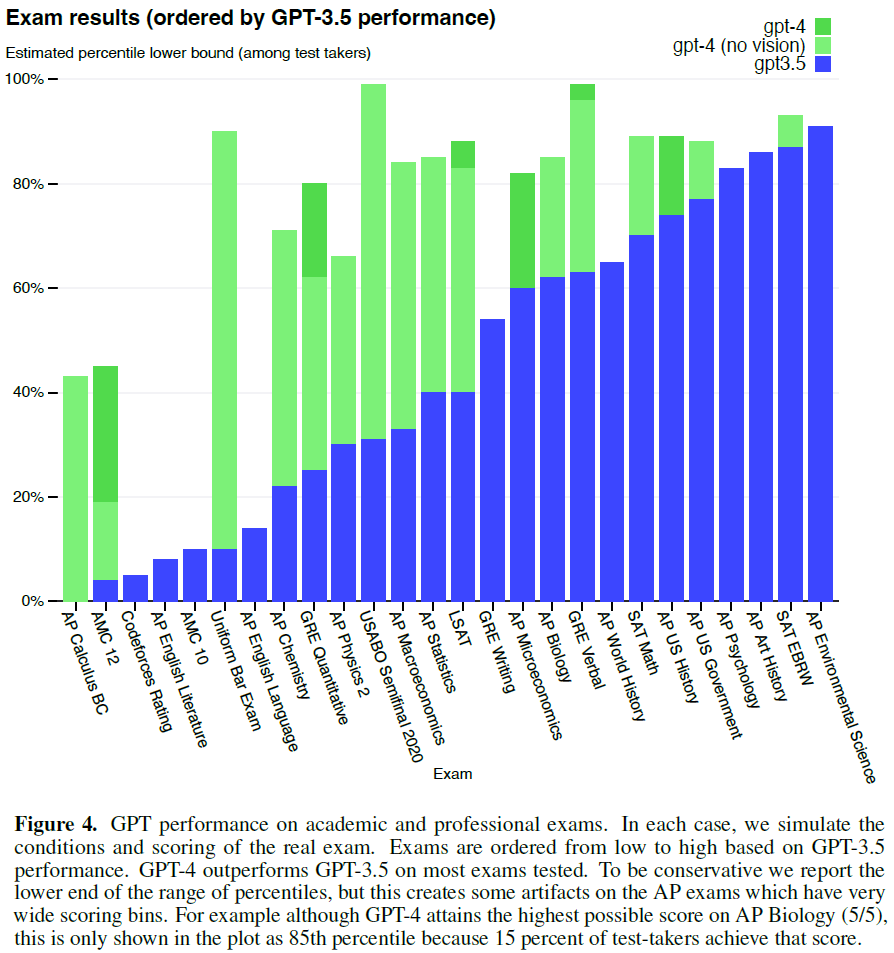

A few updates to ChatGPT have been released since the initial launch on November 30, 2022. The most significant update was the incorporation of GPT-4 into ChatGPT, which has reached human-level performance on various academic and professional exams.

GPT-4

GPT-4 differs from GPT-3.5 by being multimodal, which can accept image and text inputs and produce text outputs. It has been deployed as ChatGPT Plus that requires paid access. Like ChatGPT, the technical details (dataset construction, model size, training method, hardware, etc.) of GPT-4 are not published. A technical report[13] focusing on the capabilities, limitations, and safety properties of GPT-4 is covered here.